Venturing into Neural Nets

Written by: Elena Montes

The Task at Hand

As part of an honors reading course last spring, I dove into natural language processing (NLP) and neural networks. This blog will chart my progress through a great paper by Dr. Yoav Goldberg (Goldberg, Y. A Primer on Neural Network Models for Natural Language Processing. Journal of Artificial Intelligence Research, 57. 345–420) and other supplemental materials, but I hope for much more than that. My real goal is that, by immersing myself in the topic, I will build a collection of valuable resources for any other beginner who wants to learn these concepts.

Since the start of my computer science studies, I have been fortunate to learn the foundations of the field. However, this semester marks my initiation into less familiar territory. In a way, I am branching off from the core curriculum to pursue more specialized knowledge. Much of the research is fast-paced and ongoing; as my supervising professor, Dr. Christan Grant, mentioned to me, new papers are published nearly all the time, generating almost too much information to keep up with.

Regardless, my task is clear, so let’s get started. To understand how neural nets can be used for natural language processing, we must first build a foundational knowledge of what those two general concepts are.

(Note: this is a very broad overview of general concepts. There is a lot more detail to be found on differing techniques and implementations.)

Neural Networks

Artificial neural networks, as futuristic as that phrase sounds, originated as a concept back in the 1940s, thanks to Warren S. McCulloch and Walter Pitts (McCulloch, W. S. and Pitts, W. A Logical Calculus of the Ideas Immanent in Nervous Activity. Bulletin of Mathematical Biophysics 5. 115–133). Although many of the brain's intricacies remain mysterious to this day, researchers understood enough of its basic behavior to try modeling it. The idea was that, although the underlying electrical processes might differ significantly, a computer could imitate the way the human brain processes information and learns. Thus began research into neural networks: computer-simulated models of brain activity.

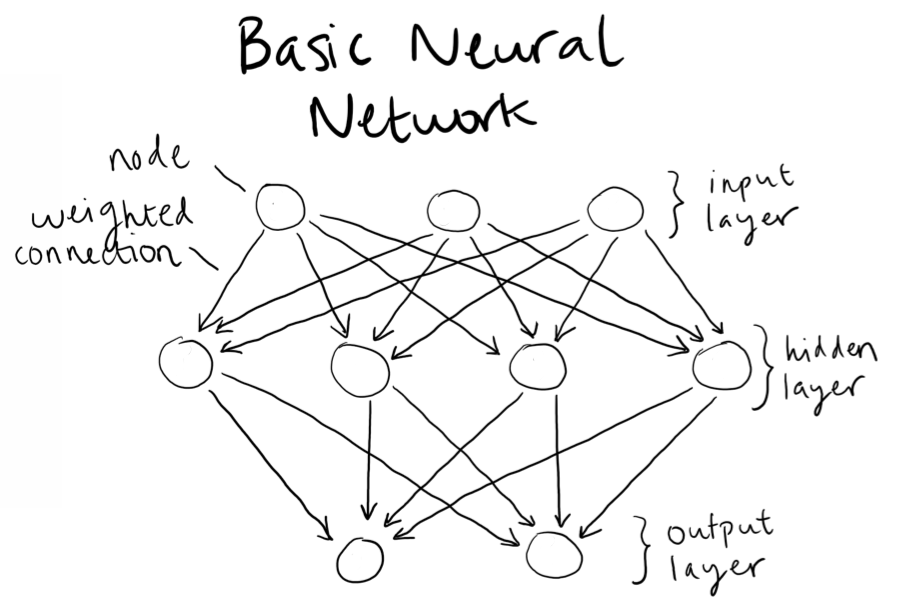

A neural network consists of two main components: processing units, modeled after neurons, and weighted connections, which act as synapses between those units.
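As a rough sketch of a single processing unit, assuming a common textbook formulation rather than anything specific to Goldberg's paper: the unit multiplies each input by the weight on its incoming connection, adds a bias term, and passes the total through an activation function. The function name `neuron`, the bias, the choice of a sigmoid activation, and the example numbers are all illustrative assumptions.

```python
import math

def neuron(inputs, weights, bias):
    """Hypothetical processing unit: weighted sum of inputs, then a sigmoid activation."""
    # Each incoming connection scales its input by a weight (the "synapse" strength).
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Squash the total into the range (0, 1); sigmoid is just one common activation choice.
    return 1.0 / (1.0 + math.exp(-total))

# Two inputs, two connection weights, and a bias, all made up for illustration.
print(neuron([1.0, 0.5], [0.4, -0.2], 0.1))  # roughly 0.5987
```

Changing a weight changes how strongly that input influences the unit's output, which is what "learning" adjusts in a neural network.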

The general structure of a typical neural network is shown in the figure above. Inputs enter the network through a layer of input nodes. These inputs are then weighted and propagated through one or more hidden layers before reaching an output layer of one or more nodes. In the figure, circles represent processing units and arrows represent weighted connections (Ingrid Russell, University of Hartford. Definition of a Neural Network).

Different types of processing units exist for different purposes, and each unit typically applies an activation function to the weighted sum of its inputs before passing the result along to the next layer. For now, though, the basics suffice; we’ll dive into specifics in future chapters.
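To make the idea of propagating inputs through layers a little more concrete, here is a minimal plain-Python sketch of a forward pass. It assumes fully connected layers and a sigmoid activation (both common choices that this post does not commit to); the helper names `layer_forward` and `forward`, the 2-3-1 layer sizes, and every weight are hypothetical.

```python
import math

def sigmoid(z):
    # One common activation function, used here purely for illustration.
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Compute one layer's outputs; weights[j][i] connects input i to unit j."""
    outputs = []
    for unit_weights, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(unit_weights, inputs)) + b
        outputs.append(sigmoid(z))
    return outputs

def forward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden units -> 1 output, with made-up weights.
network = [
    ([[0.5, -0.3], [0.8, 0.2], [-0.6, 0.9]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                  # output layer
]
print(forward([1.0, 2.0], network))
```

Each layer's outputs become the next layer's inputs, which mirrors the arrows flowing from the input nodes through the hidden layer to the output node in the figure.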

Natural Language Processing

The concept of natural language processing is deceptively simple: it refers to the computational processing of human language (Ann Copestake, University of Cambridge. Natural Language Processing). Essentially, how do you make a computer understand what a human tells it in English, Spanish, or any other language?

You may have seen NLP work its magic without even realizing it; if you’ve ever used translation software to help with your French homework or dictated a text message to your phone, you likely have NLP software to thank.

The high ambiguity, subtlety, and variability embedded in the structure of human language make deciphering intent difficult for a computer. If we were all hard-wired to phrase a request in only one way, the problem would be far easier.

For example, note all the phrases I just thought of to describe the same intent:

- “Could you pass the bag of pretzels?”

- “Pass the pretzels, please.”

- “Hand over the bag of pretzels, would ya?”

- And of course, the passive-aggressive “Sure would be nice to have some pretzels…”

(As a side note: how on earth do we train a computer to recognize sarcasm? A question for another day.)

You see the point. NLP spans many more specific subtopics, but we’ll save those for future posts.
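To see why this variability trips up a computer, consider a deliberately naive approach: compare the requests by the words they share. This minimal sketch measures Jaccard similarity (shared words divided by all distinct words) between the first pretzel request and each of the others. It is an illustrative assumption, not a technique from Goldberg's paper, and the helper names `words` and `jaccard` are hypothetical.

```python
import re

def words(text):
    """Lowercase the text and keep only runs of letters (a deliberately naive tokenizer)."""
    return set(re.findall(r"[a-z]+", text.lower()))

def jaccard(a, b):
    """Number of shared words divided by the number of distinct words in either set."""
    return len(a & b) / len(a | b)

phrases = [
    "Could you pass the bag of pretzels?",
    "Pass the pretzels, please.",
    "Hand over the bag of pretzels, would ya?",
    "Sure would be nice to have some pretzels...",
]

first = words(phrases[0])
for other in phrases[1:]:
    shared = sorted(first & words(other))
    print(f"{jaccard(first, words(other)):.2f}  shared={shared}  {other}")
```

The passive-aggressive version shares only the word "pretzels" with the first request, even though a human instantly reads the same intent in both. Surface-level word matching misses that, which is part of why richer models are needed.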

The Great Combination

Now that you have an essential understanding of what neural nets and NLP are, it should be clearer why combining the two is a good idea. Human brains clearly have a well-developed capacity to interpret and produce natural language for communication. So what better way to give this ability to a computer than with neural nets, which are modeled after the very organ that invented language in the first place?

Dr. Goldberg’s A Primer, Section 1

In addition to a brief abstract and introduction, Dr. Goldberg begins by highlighting key vocabulary and mathematical notation necessary to fully understand the text to follow.

The paper assumes familiarity with matrices and matrix multiplication.
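For a quick refresher, here is a plain-Python sketch of matrix multiplication (the function name `matmul` and the example matrices are illustrative, not from the paper). Entry (i, j) of the product AB is the dot product of row i of A with column j of B, so an m × n matrix times an n × p matrix gives an m × p result. This is also the operation a fully connected neural network layer performs when it computes every unit's weighted sum at once.

```python
def matmul(A, B):
    """Multiply an m x n matrix A by an n x p matrix B, both given as lists of rows."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("inner dimensions must match")
    p = len(B[0])
    # Entry (i, j) is the dot product of row i of A with column j of B.
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
            for i in range(len(A))]

A = [[1, 2, 3],
     [4, 5, 6]]       # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]        # 3 x 2
print(matmul(A, B))   # [[58, 64], [139, 154]]
```

Treating a single input as a 1 × 3 row, `matmul([[1, 0, 2]], B)` gives `[[29, 32]]`: one weighted sum per column of B, which is how a layer with two units would combine three inputs.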